007: Disaster Recovery Architecture on AWS

Creating robust and resilient high-availability solutions on AWS.

Thank you for joining us for today’s 5 Minute Tech Challenge! We’re so glad you’re a part of our community. Today, we have Alex Lundberg, Senior DevOps Engineer at Capital One, taking us on an architectural journey. Over to you, Alex!

AWS provides critical services for developers, and each service offers various options and configurations for achieving high availability. One fundamental concept taught to every AWS learner is the availability zone: an isolated location within an AWS Region, made up of one or more discrete data centers with redundant power, networking, and connectivity. Deploying an application across multiple availability zones protects it from a sudden outage in any single zone. But what if an entire region fails? Regional outages are less frequent, yet they still pose a risk for applications that must always be available. In this post, we will explore common multi-region architecture patterns and failover strategies, and outline a framework for weighing their tradeoffs.

Do you really need cross-region high availability?

Before weighing high-availability options, it's essential to understand the service level agreement (SLA) of your application. If your application's SLA requires 99.99% uptime or less, consider whether a multi-region architecture is necessary at all. AWS publishes the SLA for each of its services. Currently, the Region-level SLA for instances running in two or more availability zones commits to 99.99% uptime, which allows about 4 minutes and 23 seconds of downtime in an average month.
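The downtime figure follows directly from the availability percentage. The Python sketch below does that conversion; it assumes an average month of 365/12 days, so a 30-day month allows slightly less (about 4 minutes 19 seconds at 99.99%).

```python
# Convert an availability target (percent) into the downtime it allows.
SECONDS_PER_DAY = 24 * 60 * 60
AVERAGE_MONTH_DAYS = 365 / 12  # assumption: average month in a 365-day year


def allowed_downtime_seconds(availability_pct: float,
                             period_days: float = AVERAGE_MONTH_DAYS) -> float:
    """Seconds of downtime permitted over `period_days` at `availability_pct`."""
    unavailable_fraction = 1 - availability_pct / 100
    return unavailable_fraction * period_days * SECONDS_PER_DAY


def format_duration(seconds: float) -> str:
    """Render seconds as 'Xm Ys', rounded to the nearest second."""
    minutes, secs = divmod(round(seconds), 60)
    return f"{minutes}m {secs}s"


if __name__ == "__main__":
    for target in (99.9, 99.95, 99.99):
        print(f"{target}%: {format_duration(allowed_downtime_seconds(target))} per month")
```

Running it prints 43m 48s, 21m 54s, and 4m 23s per month for 99.9%, 99.95%, and 99.99% respectively, which shows how quickly the downtime budget shrinks with each extra nine.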

Architecting an application for high availability across availability zones, behind a load balancer, is relatively straightforward, and AWS offers numerous resources to guide you through the process. Multi-region availability is a different matter: proceed cautiously and assess whether it is genuinely required, as it generally costs more and demands more engineering effort to develop, validate, and maintain.

What disaster recovery method should I use?

If a multi-region application is deemed necessary, the next step is to choose a disaster recovery strategy. There are four main options: backup and restore, pilot light, warm standby, and multi-site active/active. Each varies in complexity, cost, recovery time objective (RTO, how long you can afford to be down), and recovery point objective (RPO, how much recent data you can afford to lose). AWS offers a valuable resource that discusses these tradeoffs.

Option 1: Backup & Restore

The cross-region backup and restore plan is the simplest. It is suitable when your application can tolerate an outage lasting at least as long as a full restore from backup. AWS Backup is the recommended tool for this option: create a backup plan with a cross-Region copy rule, and AWS Backup copies each backup to a vault in another region. For automatic restoration, a custom Lambda function triggered by a CloudWatch alarm that monitors your application can restore it from AWS Backup in the recovery region.
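As a rough sketch of this option, the Python below builds the `BackupPlan` argument for boto3's `backup.create_backup_plan`, with a `CopyActions` entry that copies each recovery point to a vault in the recovery region, plus a restore helper a Lambda could call to restore the newest completed recovery point. The vault names, the hourly schedule, and the 35-day retention are illustrative assumptions, and the restore metadata a job needs varies by resource type, so `metadata_overrides` is something you would work out per service. The helpers take a client as a parameter so they can be exercised without AWS credentials.

```python
from typing import Any, Optional


def build_backup_plan(plan_name: str, source_vault: str, dr_vault_arn: str,
                      schedule: str = "cron(0 * * * ? *)",
                      retention_days: int = 35) -> dict[str, Any]:
    """BackupPlan argument for backup.create_backup_plan with a cross-Region copy."""
    return {
        "BackupPlanName": plan_name,
        "Rules": [{
            "RuleName": f"{plan_name}-hourly",
            "TargetBackupVaultName": source_vault,
            # Hourly schedule: the backup interval is a lower bound on your RPO.
            "ScheduleExpression": schedule,
            "Lifecycle": {"DeleteAfterDays": retention_days},
            # Copy every recovery point into a vault in the recovery Region.
            "CopyActions": [{
                "DestinationBackupVaultArn": dr_vault_arn,
                "Lifecycle": {"DeleteAfterDays": retention_days},
            }],
        }],
    }


def latest_completed_recovery_point(points: list[dict[str, Any]]) -> dict[str, Any]:
    """Pick the newest recovery point whose backup finished successfully."""
    completed = [p for p in points if p.get("Status") == "COMPLETED"]
    if not completed:
        raise LookupError("no completed recovery points in vault")
    return max(completed, key=lambda p: p["CreationDate"])


def restore_latest(backup_client: Any, vault_name: str, iam_role_arn: str,
                   metadata_overrides: Optional[dict[str, str]] = None) -> str:
    """Start a restore of the newest recovery point in `vault_name`; return the job ID."""
    points: list[dict[str, Any]] = []
    kwargs: dict[str, Any] = {"BackupVaultName": vault_name}
    while True:  # follow NextToken pagination
        page = backup_client.list_recovery_points_by_backup_vault(**kwargs)
        points.extend(page["RecoveryPoints"])
        if not page.get("NextToken"):
            break
        kwargs["NextToken"] = page["NextToken"]

    latest = latest_completed_recovery_point(points)
    # Start from the metadata AWS Backup recorded, then apply overrides
    # (for example, a new identifier for the restored resource).
    metadata = backup_client.get_recovery_point_restore_metadata(
        BackupVaultName=vault_name,
        RecoveryPointArn=latest["RecoveryPointArn"],
    )["RestoreMetadata"]
    metadata.update(metadata_overrides or {})

    job = backup_client.start_restore_job(
        RecoveryPointArn=latest["RecoveryPointArn"],
        Metadata=metadata,
        IamRoleArn=iam_role_arn,
    )
    return job["RestoreJobId"]
```

In the recovery region you would pass something like `boto3.client("backup", region_name="us-west-2")`; the region shown is only an example.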

This solution is relatively easy to implement and provides regional resilience for a wide range of AWS services. Its drawbacks: your service must be supported by AWS Backup, the backup cadence must satisfy your RPO, and the time to back up, copy, and restore must fit within your application's RTO. This option suits non-critical applications whose cross-region data replication and recovery are not time-sensitive. For applications that are somewhat more time-sensitive, pair it with the Lambda function and CloudWatch alarm trigger described above to start the restore automatically.
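To check those drawbacks against your objectives, a back-of-the-envelope model helps. This sketch assumes the worst-case data loss is one backup interval plus the cross-region copy lag, and the worst-case recovery time is alarm detection plus restore time; the timing values are hypothetical and should come from your own restore drills.

```python
from dataclasses import dataclass


@dataclass
class BackupRestoreTimings:
    """Measured timings for a backup-and-restore DR plan, in minutes."""
    backup_interval: float  # time between scheduled backups
    copy_lag: float         # time for a recovery point to land in the DR Region
    detection: float        # time for the alarm to fire and trigger the restore
    restore: float          # time to restore and start serving traffic


def worst_case_rpo(t: BackupRestoreTimings) -> float:
    # A disaster just before the next copy lands loses one interval plus the copy lag.
    return t.backup_interval + t.copy_lag


def worst_case_rto(t: BackupRestoreTimings) -> float:
    # Recovery cannot start until the failure is detected.
    return t.detection + t.restore


def meets_objectives(t: BackupRestoreTimings, rpo: float, rto: float) -> dict[str, bool]:
    """Compare worst-case RPO/RTO against the objectives (all in minutes)."""
    return {"rpo": worst_case_rpo(t) <= rpo, "rto": worst_case_rto(t) <= rto}


if __name__ == "__main__":
    timings = BackupRestoreTimings(backup_interval=60, copy_lag=20, detection=5, restore=90)
    print(meets_objectives(timings, rpo=120, rto=60))  # {'rpo': True, 'rto': False}
```

In this made-up example the hourly backups satisfy a two-hour RPO, but a 90-minute restore blows a one-hour RTO, which is exactly the case where warm standby or pilot light becomes worth its cost.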

Option 2: Warm Standby

The second solution for multi-region high availability is the warm standby setup. In this approach, a scaled-down but fully functional version of your application runs in another region. For example, if your application is a container in ECS fronted by a load balancer, you would replicate this setup in the second region, with an ECS autoscaling policy whose minimum is one container. During a failover, the application in the secondary region starts receiving traffic, and the increased load triggers the scaling policy to add containers.
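The warm standby scaling setup might look roughly like this with Application Auto Scaling. The sketch builds request bodies for `register_scalable_target` and `put_scaling_policy` with a minimum of one task and a CPU target-tracking policy; the cluster and service names, the 60% CPU target, and the cooldowns are illustrative assumptions rather than recommendations.

```python
from typing import Any


def warm_standby_scaling(cluster: str, service: str, max_tasks: int,
                         cpu_target: float = 60.0) -> tuple[dict[str, Any], dict[str, Any]]:
    """Request bodies for Application Auto Scaling on an ECS service in the standby Region."""
    common = {
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
    }
    scalable_target = {
        **common,
        "MinCapacity": 1,          # warm standby: always keep one task running
        "MaxCapacity": max_tasks,  # must cover the primary Region's full load
    }
    policy = {
        **common,
        "PolicyName": f"{service}-cpu-target-tracking",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": cpu_target,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization",
            },
            "ScaleOutCooldown": 60,  # scale out quickly when failover traffic arrives
            "ScaleInCooldown": 300,  # scale in conservatively
        },
    }
    return scalable_target, policy


def apply_scaling(autoscaling_client: Any, cluster: str, service: str, max_tasks: int) -> None:
    """Register the service as scalable, then attach the target-tracking policy."""
    scalable_target, policy = warm_standby_scaling(cluster, service, max_tasks)
    autoscaling_client.register_scalable_target(**scalable_target)
    autoscaling_client.put_scaling_policy(**policy)
```

You would call `apply_scaling(boto3.client("application-autoscaling", region_name="us-west-2"), "orders", "orders-api", max_tasks=40)` against the standby region (all names hypothetical). Target tracking on CPU is only one option; a request-count metric can react more directly to a traffic surge.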

Compared to backup and restore, this solution recovers faster but costs more. You must keep a minimal version of your application running in the secondary region, and some components may need fully provisioned instances, adding to the expense. The application must also handle the sudden surge of load during failover, so test the autoscaling configuration thoroughly before you rely on it in a real failover event. Likewise, make sure the database instance in the secondary region can scale quickly, or provision it large enough to carry the full load after failover.
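Before running a failover load test, a quick sizing check can tell you whether the standby's maximum task count could absorb the primary region's traffic at all. The throughput figures below are hypothetical placeholders for numbers you would measure, and the 25% headroom is an arbitrary assumption.

```python
import math


def tasks_needed(peak_rps: float, rps_per_task: float, headroom: float = 0.25) -> int:
    """Tasks required to carry the primary Region's peak traffic plus spare headroom."""
    return math.ceil(peak_rps * (1 + headroom) / rps_per_task)


def check_standby_capacity(peak_rps: float, rps_per_task: float, max_tasks: int,
                           headroom: float = 0.25) -> dict[str, object]:
    """Report whether the autoscaling ceiling covers the failover load."""
    needed = tasks_needed(peak_rps, rps_per_task, headroom)
    return {"tasks_needed": needed, "max_tasks": max_tasks, "ok": needed <= max_tasks}


if __name__ == "__main__":
    # Hypothetical load-test numbers: 4,000 req/s peak, 150 req/s per task.
    print(check_standby_capacity(peak_rps=4000, rps_per_task=150, max_tasks=30))
    # {'tasks_needed': 34, 'max_tasks': 30, 'ok': False}
```

A check like this only catches a ceiling that is too low; how fast the service actually gets there still has to be measured in a real scale-out test.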

Option 3: Pilot Light

The third solution is the pilot light. It differs from warm standby in that it does not run the entire application, only the components that hold data, such as databases and object storage. In the ECS container scenario, the secondary region would run zero ECS containers, and the load balancer for the empty ECS service would not exist. The PostgreSQL replica, however, would remain active and keep receiving data from the primary region. Whereas a warm standby can serve a reduced volume of requests and scale up as needed, a pilot light must provision the load balancer and bring up the ECS service before it can serve any requests. The pilot light therefore costs less than warm standby, with the same RPO, at the price of a longer RTO. Choose it when you want to optimize costs and can accept a longer recovery time.
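For the pilot light, bringing the application up could be sketched as below. It assumes the ECS service already exists in the recovery region with a desired count of zero and that the load balancer and target group have been provisioned (for example, by your infrastructure-as-code pipeline), so it covers only the scale-out step. The environment variable names `DR_CLUSTER`, `DR_SERVICE`, and `DR_DESIRED_COUNT` are hypothetical.

```python
import os
from typing import Any


def ignite_pilot_light(ecs_client: Any, cluster: str, service: str,
                       desired_count: int, wait: bool = True) -> int:
    """Scale a pilot-light ECS service up from zero tasks; return the new desired count."""
    if desired_count < 1:
        raise ValueError("desired_count must be at least 1 to serve traffic")
    response = ecs_client.update_service(
        cluster=cluster, service=service, desiredCount=desired_count
    )
    if wait:
        # Block until the deployment reaches a steady state (tasks running and healthy).
        ecs_client.get_waiter("services_stable").wait(cluster=cluster, services=[service])
    return response["service"]["desiredCount"]


def handler(event: dict, context: Any) -> dict:
    """Hypothetical Lambda entry point; configuration comes from environment variables."""
    import boto3  # bundled with the AWS Lambda Python runtime

    ecs = boto3.client("ecs")  # the function runs in the recovery Region
    count = ignite_pilot_light(
        ecs,
        cluster=os.environ["DR_CLUSTER"],
        service=os.environ["DR_SERVICE"],
        desired_count=int(os.environ.get("DR_DESIRED_COUNT", "4")),
    )
    return {"desiredCount": count}
```

The `services_stable` waiter polls for up to about ten minutes by default, so a Lambda running this needs a timeout long enough to cover it, or it can skip the wait with `wait=False` and let a separate check confirm the service is healthy.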

Option 4: Multi-Site

The final solution for regional availability is the multi-site approach. In this architecture, the application is fully replicated in a separate region, and both regions remain active. It resembles warm standby but recovers faster, because no additional resources need to scale up during failover. However, it is considerably more expensive, making it best suited to mission-critical services.

How would this look for my application?

Once you have chosen a regional failover strategy, the next step is to architect how failover is managed. The following examples assume a multi-site setup for simplicity.

Let's consider a containerized microservice hosted in ECS, fronted by a load balancer, and backed by PostgreSQL. To make it resilient to a regional outage in an active/active setup, replicate this stack in a second region. Then configure the primary region's hostname in Route53 with a failover routing policy: the primary record points to the primary region's load balancer and is associated with a health check, and the secondary record points to the secondary region's load balancer. If the Route53 health check fails, the routing policy automatically directs traffic to the secondary load balancer, giving users access to the failover application. For more details, see this guide.
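The Route53 configuration described above can be sketched as a `ChangeBatch` for boto3's `route53.change_resource_record_sets`: a primary alias record tied to a health check and a secondary alias record pointing at the other region's load balancer. The hostname, load balancer DNS names, zone IDs, and health check ID are placeholders.

```python
from typing import Any, Optional


def failover_record(name: str, role: str, lb_dns_name: str, lb_zone_id: str,
                    health_check_id: Optional[str] = None) -> dict[str, Any]:
    """Alias A record with a failover routing policy that points at a load balancer."""
    if role not in ("PRIMARY", "SECONDARY"):
        raise ValueError("role must be PRIMARY or SECONDARY")
    record: dict[str, Any] = {
        "Name": name,
        "Type": "A",
        "SetIdentifier": f"{name}-{role.lower()}",
        "Failover": role,
        "AliasTarget": {
            # The load balancer's canonical hosted zone ID, not your own zone's ID.
            "HostedZoneId": lb_zone_id,
            "DNSName": lb_dns_name,
            "EvaluateTargetHealth": True,
        },
    }
    if health_check_id:
        record["HealthCheckId"] = health_check_id
    return record


def failover_change_batch(name: str, primary_lb: tuple[str, str],
                          secondary_lb: tuple[str, str],
                          primary_health_check_id: str) -> dict[str, Any]:
    """ChangeBatch for route53.change_resource_record_sets with both failover records."""
    return {
        "Comment": f"Cross-Region failover for {name}",
        "Changes": [
            {"Action": "UPSERT", "ResourceRecordSet": failover_record(
                name, "PRIMARY", *primary_lb, health_check_id=primary_health_check_id)},
            {"Action": "UPSERT", "ResourceRecordSet": failover_record(
                name, "SECONDARY", *secondary_lb)},
        ],
    }
```

You would pass the result as `ChangeBatch=` together with your own hosted zone's ID. Each load balancer's DNS name and canonical hosted zone ID can be read from `elbv2.describe_load_balancers` (the `DNSName` and `CanonicalHostedZoneId` fields).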

However, one crucial step is still missing. Although the secondary application can serve requests, writes to its database will fail, because the secondary database is only a read replica of the primary. You must promote that replica to a standalone primary instance. To automate this on every failover, you can write a Lambda function that runs when Route53 fails over, for example triggered by a CloudWatch alarm on the Route53 health check's status.
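A minimal sketch of that promotion Lambda follows. It assumes an EventBridge rule delivers a "CloudWatch Alarm State Change" event for an alarm on the primary endpoint's Route53 health check; because Route53 health check metrics are published in us-east-1, that alarm and its rule may need to live there and forward events to the function's region. `FAILOVER_ALARM_NAME` and `REPLICA_DB_INSTANCE_ID` are hypothetical environment variables. Keep in mind that promotion is one-way: to fail back, you must rebuild a replica in the other direction.

```python
import os
from typing import Any


def is_failover_alarm(event: dict, alarm_name: str) -> bool:
    """True for an EventBridge 'CloudWatch Alarm State Change' event entering ALARM."""
    detail = event.get("detail", {})
    return (
        event.get("detail-type") == "CloudWatch Alarm State Change"
        and detail.get("alarmName") == alarm_name
        and detail.get("state", {}).get("value") == "ALARM"
    )


def promote_replica(rds_client: Any, replica_id: str) -> str:
    """Promote a cross-Region read replica to a standalone, writable instance."""
    db = rds_client.describe_db_instances(DBInstanceIdentifier=replica_id)["DBInstances"][0]
    # Skip instances that are no longer replicas, so a retried invocation is harmless.
    if not db.get("ReadReplicaSourceDBInstanceIdentifier"):
        return "already-promoted"
    response = rds_client.promote_read_replica(DBInstanceIdentifier=replica_id)
    return response["DBInstance"]["DBInstanceStatus"]


def handler(event: dict, context: Any) -> dict:
    """Hypothetical Lambda entry point, deployed in the replica's (secondary) Region."""
    import boto3  # bundled with the AWS Lambda Python runtime

    if not is_failover_alarm(event, os.environ["FAILOVER_ALARM_NAME"]):
        return {"promoted": False}
    rds = boto3.client("rds")  # must target the Region where the replica lives
    status = promote_replica(rds, os.environ["REPLICA_DB_INSTANCE_ID"])
    return {"promoted": True, "status": status}
```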

How do I replicate the data layer?

The data layer is the most challenging aspect of a multi-region application. For our example application, the simplest solution is to use a data service with built-in cross-region replication and failover support. If you are using PostgreSQL, consider switching to Aurora Global Database, which lets a single database span multiple regions. With Aurora Global Database, you can promote a secondary region to take full read/write traffic, typically in under a minute. Note that the cross-region failover does not happen on its own: you initiate it, either manually or from your own automation.
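If you adopt Aurora Global Database, the failover you initiate can be a single API call. This sketch uses boto3's `describe_global_clusters` to skip the call when the target is already the writer, then `failover_global_cluster` with `AllowDataLoss=True`, which recent SDK versions accept for an unplanned, outage-driven failover. The identifiers are placeholders, and the client should be created in a region that is still reachable, such as the secondary.

```python
from typing import Any, Optional


def current_writer_arn(rds_client: Any, global_cluster_id: str) -> Optional[str]:
    """Return the ARN of the cluster that currently accepts writes, if any."""
    cluster = rds_client.describe_global_clusters(
        GlobalClusterIdentifier=global_cluster_id
    )["GlobalClusters"][0]
    for member in cluster["GlobalClusterMembers"]:
        if member["IsWriter"]:
            return member["DBClusterArn"]
    return None


def fail_over_to(rds_client: Any, global_cluster_id: str, target_cluster_arn: str,
                 allow_data_loss: bool = True) -> str:
    """Promote `target_cluster_arn` to be the writer of the global database."""
    if current_writer_arn(rds_client, global_cluster_id) == target_cluster_arn:
        return "already-writer"  # idempotent: nothing to do
    response = rds_client.failover_global_cluster(
        GlobalClusterIdentifier=global_cluster_id,
        TargetDbClusterIdentifier=target_cluster_arn,
        # True = unplanned failover for a Regional outage (recent writes may be lost);
        # for a planned move, AWS recommends switchover_global_cluster instead.
        AllowDataLoss=allow_data_loss,
    )
    return response["GlobalCluster"]["Status"]
```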

AWS offers various global datastore options for different needs: DynamoDB global tables, ElastiCache for Redis Global Datastore, Aurora Global Database, and S3 Cross-Region Replication.

Alternatively, if no native AWS service manages regional failover for your data layer, you may need to perform the failover steps manually, or develop custom Lambda functions triggered by EventBridge events that signal your application's health, such as CloudWatch alarm state changes.
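Wiring health alarms to a failover function through EventBridge might look like this. The sketch creates a rule whose event pattern matches the named CloudWatch alarms entering the `ALARM` state and targets a Lambda function; the rule name, alarm names, and function ARN are hypothetical. The function also needs a resource-based permission allowing `events.amazonaws.com` to invoke it, which is not shown.

```python
import json
from typing import Any, Iterable


def alarm_state_change_pattern(alarm_names: Iterable[str]) -> dict[str, Any]:
    """Event pattern matching the given CloudWatch alarms when they enter ALARM."""
    return {
        "source": ["aws.cloudwatch"],
        "detail-type": ["CloudWatch Alarm State Change"],
        "detail": {
            "alarmName": list(alarm_names),
            "state": {"value": ["ALARM"]},
        },
    }


def put_failover_rule(events_client: Any, rule_name: str,
                      alarm_names: Iterable[str], lambda_arn: str) -> None:
    """Create (or update) a rule that invokes the failover Lambda on those alarms."""
    events_client.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(alarm_state_change_pattern(alarm_names)),
        State="ENABLED",
    )
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "failover-lambda", "Arn": lambda_arn}],
    )
```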

If your datastore requires a custom failover solution, as is often the case for datastores with strong ACID guarantees such as relational databases, expect a fair amount of testing and maintenance to keep it reliable. If you run the failover from a Lambda function, deploy that function in the secondary region, so you can still fail over even if the primary region becomes completely inaccessible.
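One way to enforce that advice in code is to have the failover function check where it is running and build every client for the secondary region explicitly. In this sketch, `AWS_REGION` is the environment variable the Lambda runtime sets; the region names are examples, and `client_factory` would normally be `boto3.client`.

```python
import os
from typing import Any, Callable, Iterable, Mapping, Optional


def secondary_region_clients(secondary_region: str, services: Iterable[str],
                             client_factory: Callable[..., Any],
                             env: Optional[Mapping[str, str]] = None) -> dict[str, Any]:
    """Build AWS clients pinned to the secondary Region, refusing to run anywhere else."""
    env = os.environ if env is None else env
    running_in = env.get("AWS_REGION")  # set automatically by the Lambda runtime
    if running_in != secondary_region:
        raise RuntimeError(
            f"failover must run in {secondary_region}, but this code runs in {running_in}"
        )
    # Pass region_name explicitly so no call silently targets the primary Region.
    return {name: client_factory(name, region_name=secondary_region) for name in services}
```

For example, `secondary_region_clients("us-west-2", ["rds", "events"], boto3.client)` fails fast if the function is accidentally deployed to the primary region.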

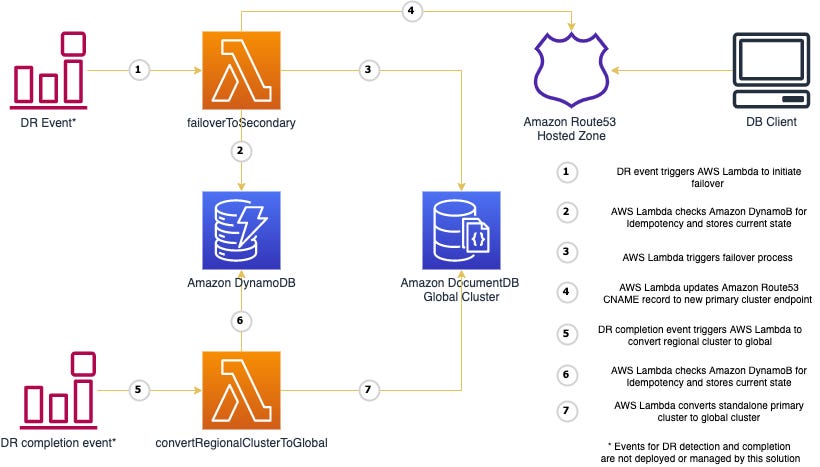

For example, you can refer to AWS's tool for performing Amazon DocumentDB failover. It demonstrates two Lambda functions: one that executes the failover and another that reverses it once disaster recovery is complete. Because each application has unique failover requirements, the tool doesn't define a disaster recovery event for you. To adapt this pattern to other datastores that lack a native global solution, create an EventBridge (formerly CloudWatch Events) rule that reacts to your application's health and triggers a Lambda function to execute the failover steps specific to your datastore.
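The two-function pattern (one to fail over, one to reverse it) can be expressed generically as a list of steps, each paired with its undo. This is a sketch of the general idea, not the AWS DocumentDB tool itself. It stops at the first failed step instead of rolling back automatically, on the assumption that undoing a half-finished failover during a real outage is a decision for an operator.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    """One datastore-specific failover action plus the action that reverses it."""
    name: str
    do: Callable[[], None]
    undo: Callable[[], None]


class FailoverError(RuntimeError):
    """Raised when a step fails; records how far the failover got."""
    def __init__(self, failed_step: str, completed: list[str]):
        super().__init__(f"step {failed_step!r} failed; completed so far: {completed}")
        self.failed_step = failed_step
        self.completed = completed


def run_failover(steps: list[Step]) -> list[str]:
    """Run steps in order, stopping at the first failure so an operator can take over."""
    completed: list[str] = []
    for step in steps:
        try:
            step.do()
        except Exception as exc:
            raise FailoverError(step.name, completed) from exc
        completed.append(step.name)
    return completed


def run_failback(steps: list[Step], completed: list[str]) -> list[str]:
    """Reverse a failover by undoing the completed steps in reverse order."""
    by_name = {step.name: step for step in steps}
    undone: list[str] = []
    for name in reversed(completed):
        by_name[name].undo()
        undone.append(name)
    return undone
```

For a datastore without a native global solution, the steps might be hypothetical callables such as `Step("promote-secondary", promote, demote)` and `Step("update-connection-config", point_to_dr, point_to_primary)`; the failover Lambda calls `run_failover(steps)`, and the failback Lambda later calls `run_failback(steps, completed)`.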

Key Takeaways

To summarize the main points of high-availability cross-region architecture:

Evaluate the necessity of a multi-region application, considering SLAs and cost implications.

Understand the data layer and utilize AWS-provided global solutions when possible.

Learn how to create warm-standby, pilot light, or active/active setups using Route53 failover records.

Develop custom solutions using AWS Lambda and CloudWatch for handling data layer failovers, if necessary.

By following these principles, you can create robust and resilient high-availability solutions on AWS.

Coming up in two weeks, we will be learning from Cassidy Williams, a developer advocate, educator, advisor, software engineer, and memer. Cassidy will be sharing her knowledge on Team Knowledge Sharing (#meta). Maybe we’ll end up learning a few tips and tricks to improve these posts!