Thank you for joining us for today’s 5 Minute Tech Challenge! We’re so glad you’re a part of our community. Today, we have Sascha Strand, a Payments Platform Software Engineer at Google, teaching us about Protocol Buffers. Over to you, Sascha!

Some data architecture choices require predicting the future. How will the company's tech stack evolve? What new tools will we want to leverage down the line? How to format data for transmission and storage is one of those problems. This article explores that question by looking at the technology trends of the past, in the hope of better understanding the technology trends of the future.

Part I: What came before JSON

The problem of how to transmit and store a collection of related pieces of data (for example, an experiment's date, results, etc.) goes back to the earliest commercial programming language, FORTRAN. The language’s initial 1958 release included a FORMAT keyword that described how data elements of different types were laid out when stored on external media. A FORMAT statement could be placed anywhere in the program and then referenced as part of an I/O command like READ or PRINT.1

The formatting language was wildly concise (and way outside the scope of this article), but the code snippet below reads three variables, A, B, and C. Using the FORMAT statement FMT, A and B are each assigned an integer and C an array of integers. The data could be stored in a newline-delimited file, where each line (called a record) corresponded to one instance of the FORMAT pattern.

One upside of this approach was that the output was human-readable: on any medium, it was written as characters. It was even possible to include something like a header and to wrap lines. It was also easy to share, since the data could be read independently of the FORMAT statement.

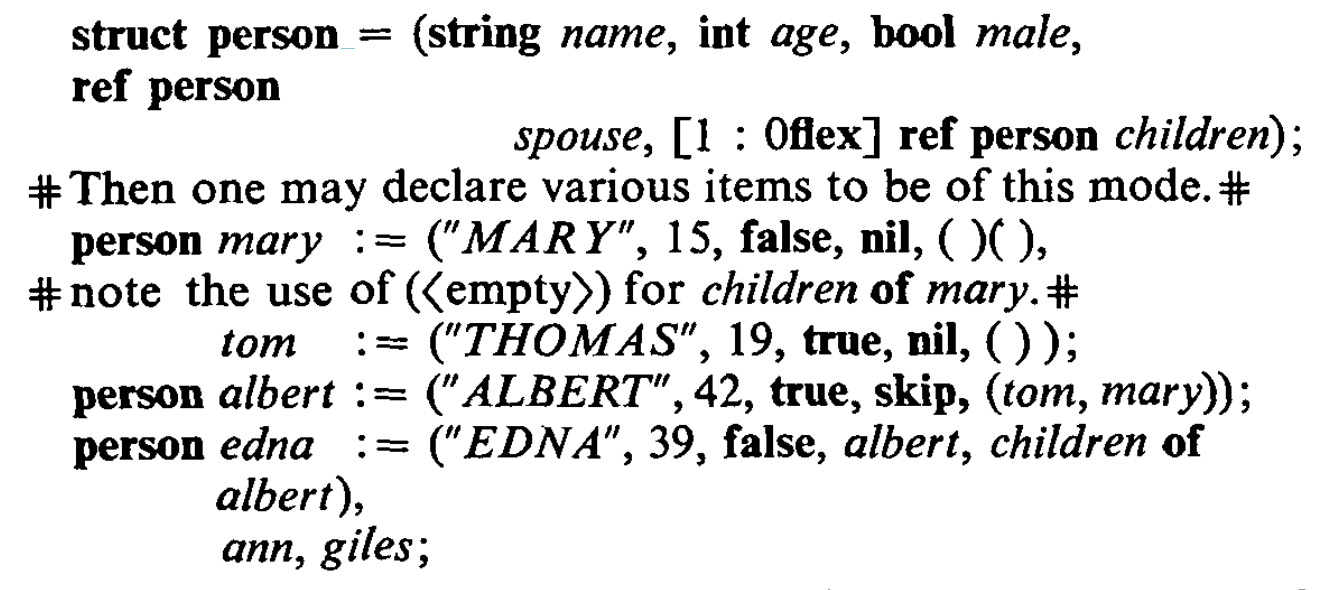
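As a rough modern analogue of that snippet, here is a minimal Python sketch of writing and reading fixed-width, newline-delimited records. The 5-character field width and the three-element array C are assumptions for illustration, not details taken from the FORTRAN code.

```python
# Hypothetical fixed-width layout, loosely in the spirit of a FORTRAN FORMAT:
# two integers (A, B) followed by a three-element integer array (C),
# each right-aligned in a 5-character field.
FIELD_WIDTH = 5
ARRAY_LEN = 3  # assumed length of C


def write_record(a: int, b: int, c: list[int]) -> str:
    """Render one record as a single line of fixed-width integer fields."""
    fields = []
    for value in [a, b, *c]:
        text = f"{value:>{FIELD_WIDTH}d}"
        if len(text) > FIELD_WIDTH:
            raise ValueError(f"{value} does not fit in {FIELD_WIDTH} characters")
        fields.append(text)
    return "".join(fields)


def read_record(line: str) -> tuple[int, int, list[int]]:
    """Slice a line back into fields using only the agreed-upon widths."""
    count = 2 + ARRAY_LEN
    values = [int(line[i * FIELD_WIDTH:(i + 1) * FIELD_WIDTH]) for i in range(count)]
    return values[0], values[1], values[2:]


# One record per line: readable by a human, parseable by anyone who knows the widths.
data = "\n".join([write_record(1, 42, [7, 8, 9]), write_record(-3, 100, [0, 12345, 6])])
print(data)
for line in data.splitlines():
    print(read_record(line))
```

Note that nothing in the file itself says where one field ends and the next begins; the widths live only in the program, just as they lived in the FORMAT statement.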

Just ten years after the first release of FORTRAN, an international research group based in Austria released ALGOL 68. The language was complex and slow to be adopted, but buried in that complexity were innovative new features. One of them, the struct, addressed the problem of structured data head-on. In his widely circulated 1971 article “ALGOL 68 with Fewer Tears,” ALGOL developer Charles Lindsey introduced the concept: “A ‘structure’ is a set of values of various types that are associated together as a unit that may be handled like any other value (e.g. it may be assigned to other similar structures).”2

The syntax looks familiar to an object-oriented programmer, and the abstraction directly inspired the C struct a decade later.3

While FORTRAN was developed specifically for the IBM 704 computer, the ALGOL family of languages that led to ALGOL 68 was started in the 1950s by mathematicians, many of whom lived in Europe at a time when there were no particularly powerful computers on the continent.

Writing later for an ACM journal, computer scientist Maurice Wilkes described how the commercially minded FORTRAN developers viewed their abstraction-focused ALGOL counterparts: “As far as computing went, they saw themselves as practical people solving real problems and felt a distrust for those whom they regarded as having their heads in the clouds.”4

FORTRAN’s FORMAT statements and human-readable, newline-delimited file format solved one problem well: how to store and transfer heterogeneous data with the technology at hand. It was simple to understand and work with. It was durable (even if the punch cards holding your data were shuffled or the tape was cut in half, you could still parse and read each piece) and flexible (you could write a file of encoded data by hand if the occasion arose).

ALGOL 68, on the other hand, offered an abstraction so powerful, the struct, that it is still used in many languages today. Because a single data type encapsulated all of its data members, the struct was considerably easier for a developer to use. And because it allowed storage in a binary format, values like multi-digit numbers could be stored more compactly without any explicit compression. If one did want to compress, encrypt, annotate, or otherwise transform the data, that step could be added to the I/O without the application layer being any the wiser. Giving up human readability of the encoded files gained a huge amount.
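To make the compactness point concrete, here is a small Python sketch using the standard `struct` module (which converts between Python values and C-style structs packed into bytes). It packs a hypothetical experiment record into fixed-size binary fields and compares that with the same values written as text. The record layout is an assumption for illustration.

```python
import struct

# A hypothetical experiment record: an int32 id, an int32 date as YYYYMMDD,
# and a float64 result. "<" means little-endian with no padding between fields.
RECORD = struct.Struct("<iid")


def as_text(exp_id: int, date: int, result: float) -> bytes:
    """The same values written as characters, one record per line."""
    return f"{exp_id} {date} {result!r}\n".encode("ascii")


record = (1234567, 19680101, 0.1234567890123)
text = as_text(*record)
binary = RECORD.pack(*record)

print(len(text), "bytes as text vs", len(binary), "bytes packed")
# Unpacking recovers exactly the same values.
assert RECORD.unpack(binary) == record
```

The packed form is always `RECORD.size` bytes, however many digits the numbers have; the price is that nobody can read it without knowing the layout.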

Part II: Two Paths Emerge

The tradeoffs, and occasional tension, between FORTRAN’s and ALGOL 68’s approaches to structured data remain half a century later, between JSON and Protocol Buffers.

JSON takes existing technologies (the JavaScript language and the web browser) and offers a way for “practical people solving real problems,” as Wilkes put it, to store and move structured data in that domain. Just like FORTRAN’s FORMAT statements, it’s easy to understand and work with. It’s durable and flexible.

Also just like FORTRAN’s FORMAT statements, JSON takes up considerably more space than necessary. And while it is easy for a developer to get started with, day-to-day reading and writing across services requires a lot of boilerplate code, especially in a multi-language environment.5
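As an illustration of that boilerplate (under footnote 5's assumption that you want a class on the other end), here is a minimal Python sketch of the hand-written glue for one hypothetical `Experiment` type. Every other language that consumes the same JSON would need its own equivalent, kept in sync by hand.

```python
import json
from dataclasses import dataclass
from datetime import date


@dataclass
class Experiment:
    """Hypothetical in-memory type the JSON must be mapped onto."""
    experiment_date: date
    results: list[float]


def experiment_to_json(exp: Experiment) -> str:
    # JSON has no date type, so the date must be turned into a string by hand.
    return json.dumps({
        "experiment_date": exp.experiment_date.isoformat(),
        "results": exp.results,
    })


def experiment_from_json(raw: str) -> Experiment:
    obj = json.loads(raw)
    # Nothing checks the shape of the input for us, so we validate manually.
    if not isinstance(obj, dict):
        raise ValueError("expected a JSON object")
    results = obj.get("results")
    if not isinstance(results, list) or not all(
        isinstance(r, (int, float)) and not isinstance(r, bool) for r in results
    ):
        raise ValueError("'results' must be a list of numbers")
    return Experiment(
        experiment_date=date.fromisoformat(obj["experiment_date"]),
        results=[float(r) for r in results],
    )


original = Experiment(date(2021, 3, 14), [0.5, 1.25])
assert experiment_from_json(experiment_to_json(original)) == original
```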

Protocol Buffers address every one of these downsides. Like the ALGOL 68 struct, they introduce a powerful abstraction, one that takes added effort and overhead to integrate into a system initially.

This article contends that both are valuable and that both evolved from legitimate, competing needs. We need software that can be developed quickly using frameworks tailor-made to the domain of the moment, and we need software that is extensible and performant, even at a higher development cost.

This article also contends that there is a reason structs are with us today and FORTRAN’s FORMAT statements faded into the background.6 Good abstractions live long lives.

Part III: Two Paths Continue

Like JSON, Protocol Buffers (“protobufs”) can represent deeply nested structures of data. Unlike JSON, they require the structure of the object to be defined ahead of time in a dedicated language (the proto language). The protocol buffer compiler, protoc, can then generate library code in any of several languages (Java, Python, C++, Go, etc.) containing a class that represents this structure. Because the definition is kept separate from the data, a protobuf instance does not need to store field names or enum value names: each field is identified by its field number, and each enum value by its integer value.
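To show what identifying fields by number looks like on the wire, here is a minimal, hand-rolled Python sketch of the protobuf binary encoding for a hypothetical `Experiment` message. Each field starts with a tag that packs the field number and a wire type as `(field_number << 3) | wire_type`. The sketch covers only varint and length-delimited fields; in practice you would use the code protoc generates rather than writing this yourself.

```python
# Hypothetical schema, written in the proto language for reference:
#   enum Status { STATUS_UNSPECIFIED = 0; PASSED = 1; FAILED = 2; }
#   message Experiment { int32 id = 1; string name = 2; Status status = 3; }
WIRETYPE_VARINT = 0  # ints, enums, bools
WIRETYPE_LEN = 2     # strings, bytes, nested messages


def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 bits per byte, high bit set on all but the last byte."""
    if value < 0:
        value += 1 << 64  # negative int32/int64 are encoded as 64-bit two's complement
    out = bytearray()
    while True:
        low_bits = value & 0x7F
        value >>= 7
        if value:
            out.append(low_bits | 0x80)
        else:
            out.append(low_bits)
            return bytes(out)


def encode_tag(field_number: int, wire_type: int) -> bytes:
    # The field *number*, not its name, goes on the wire.
    return encode_varint((field_number << 3) | wire_type)


def encode_varint_field(field_number: int, value: int) -> bytes:
    return encode_tag(field_number, WIRETYPE_VARINT) + encode_varint(value)


def encode_string_field(field_number: int, text: str) -> bytes:
    data = text.encode("utf-8")
    return encode_tag(field_number, WIRETYPE_LEN) + encode_varint(len(data)) + data


FAILED = 2  # enum values travel as plain integers too
message = (encode_varint_field(1, 150)
           + encode_string_field(2, "testing")
           + encode_varint_field(3, FAILED))
print(message.hex(" "))  # 08 96 01 12 07 74 65 73 74 69 6e 67 18 02
```

The 14-byte result carries no field names at all: a reader needs the schema to know that field 2 is `name`.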

Several recent articles compare the serialization and deserialization speeds of Protocol Buffers and JSON and consistently observe protobufs running faster. In benchmark tests by Auth0 R&D engineer Bruno Krebs, Protocol Buffers were consistently faster than JSON to write to and read from a network. A separate experiment by GitHub user nilsmagnus showed that, for a JSON object consisting of integers, floats, and small strings with moderately sized field names, converting to a protobuf saved 60-75% of the space used by the same JSON object.7 His results suggest that Krebs’s speed improvements come mostly from the fact that Protocol Buffers are simply smaller.
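The size difference is easy to reproduce in miniature. This Python sketch encodes the same hypothetical batch of sensor readings as JSON and in a hand-rolled protobuf-style encoding (each message length-prefixed), then prints raw and zlib-compressed sizes. It is not a benchmark and makes no claim about the figures cited above; the schema and data are invented for illustration.

```python
import json
import zlib


def varint(value: int) -> bytes:
    """Base-128 varint (non-negative values only in this sketch)."""
    out = bytearray()
    while True:
        low_bits, value = value & 0x7F, value >> 7
        out.append(low_bits | 0x80 if value else low_bits)
        if not value:
            return bytes(out)


def encode_reading(sensor_id: int, temp_milli_c: int, label: str) -> bytes:
    # Hypothetical schema:
    #   message Reading { int32 sensor_id = 1; int32 temp_milli_c = 2; string label = 3; }
    label_bytes = label.encode("utf-8")
    return (varint(1 << 3 | 0) + varint(sensor_id)
            + varint(2 << 3 | 0) + varint(temp_milli_c)
            + varint(3 << 3 | 2) + varint(len(label_bytes)) + label_bytes)


readings = [(i, 20000 + 7 * i, f"sensor-{i}") for i in range(1, 101)]

json_bytes = json.dumps(
    [{"sensor_id": s, "temp_milli_c": t, "label": label} for s, t, label in readings]
).encode("utf-8")
# Length-prefix each message so a stream of them can be split apart again.
proto_bytes = b"".join(
    varint(len(msg)) + msg for msg in (encode_reading(*r) for r in readings)
)

for name, blob in [("JSON", json_bytes), ("protobuf-style", proto_bytes)]:
    print(f"{name:>14}: {len(blob):5d} bytes raw, {len(zlib.compress(blob)):5d} compressed")
```

Most of the raw gap here comes from repeating key names and writing numbers as text in every JSON record; compression typically recovers part of that, so the exact ratios depend heavily on the data.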

Part IV: Best Practices and Personal Experience

At Google, Protocol Buffers are the standard for all manner of data storage and transfer. They’re used between the front end and back end of web applications. They are used to store data at rest in flat files. They are used to define the schemas of relational databases. Just about everywhere there is structured data, there are Protocol Buffers. Google publishes a helpful, prescriptive list of best practices that will steer even the newest user onto a safe course.

I used both JSON and Protocol Buffers when I worked at the fintech startup CommonBond, and in that fast-paced, smaller-scale environment, Protocol Buffers did not seem worth the added complexity. It was great to have custom data types and enums in the data. We certainly didn’t complain about I/O performance benefits. But time to launch was almost always our highest priority.

One design decision we made early on also cost a lot of developer toil later: we compiled the proto definition alongside the application binary. This meant that every time we released a new version of the proto definition, any binary that needed that version had to be re-released too. For data management applications that didn’t need to know the proto definition at the application level, but still needed the most up-to-date definition to retrieve the data, these releases added significant toil.

Assuming CI/CD was too heavy a lift, we could still have addressed this problem in a number of ways. For example, we could have stored the generated proto library code in a flat file or even in our version control system, and then pulled it into the running binary on demand or on a schedule.
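For a Python service, one way to pull generated code in at runtime is sketched below, assuming the generated module (a hypothetical `experiment_pb2.py`) has already been fetched to a local path. The standard `importlib` machinery loads it, so picking up a new definition does not require a new release. The demo writes a stand-in module to a temporary directory, because real generated code also needs the protobuf runtime installed. Loading code from shared storage also means trusting whoever can write there.

```python
import importlib.util
import sys
import tempfile
from pathlib import Path
from types import ModuleType


def load_generated_module(module_name: str, path: Path) -> ModuleType:
    """Import generated code from a file fetched at runtime (for example, pulled
    from version control or shared storage) instead of baking it into the release."""
    spec = importlib.util.spec_from_file_location(module_name, str(path))
    if spec is None or spec.loader is None:
        raise ImportError(f"cannot load {module_name!r} from {path}")
    module = importlib.util.module_from_spec(spec)
    sys.modules[module_name] = module  # later imports by name see the newest version
    spec.loader.exec_module(module)
    return module


# Demo with a stand-in for a generated module (hypothetical experiment_pb2.py).
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "experiment_pb2.py"
    path.write_text('SCHEMA_VERSION = 1\nFIELDS = ("id",)\n')
    v1 = load_generated_module("experiment_pb2", path)

    # A new definition lands; the running process picks it up without a re-release.
    path.write_text('SCHEMA_VERSION = 2\nFIELDS = ("id", "name")\n')
    v2 = load_generated_module("experiment_pb2", path)

print(v1.SCHEMA_VERSION, v2.SCHEMA_VERSION, v2.FIELDS)  # 1 2 ('id', 'name')
```

Objects created from the old module keep working; only new lookups see the new version, which is usually what a data-management job polling on a schedule wants.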

Protocol Buffers do require managing generated code, adding special build steps, and, one way or another, sharing the definition of a structured data object along with the data. They are not human-readable at rest. You cannot reasonably write or edit them by hand. And while a field can be deprecated, actually removing it forces a team to abandon any data that corresponds to it. If you change your proto definition a lot, your files may fill with echoes of bygone data models.
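Here is a minimal Python sketch of where those echoes come from: protobuf-style bytes written under an older schema, decoded against a newer schema from which a field has been removed. The schemas and bytes are hypothetical, and only the varint and length-delimited wire types are handled. The removed field's bytes are still present, but the new schema has no name or type for them, so they surface only as a bare field number and raw value. (The proto language's `reserved` statement exists so that a retired field number is never reused with a different meaning.)

```python
def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a base-128 varint starting at pos; return (value, next position)."""
    result = shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7


def decode(buf: bytes, schema: dict[int, str]) -> tuple[dict[str, object], dict[int, object]]:
    """Split fields into those the current schema names and those it no longer knows."""
    known: dict[str, object] = {}
    unknown: dict[int, object] = {}
    pos = 0
    while pos < len(buf):
        tag, pos = read_varint(buf, pos)
        field_number, wire_type = tag >> 3, tag & 0x7
        if wire_type == 0:  # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:  # length-delimited
            length, pos = read_varint(buf, pos)
            value = buf[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} is not handled in this sketch")
        # If a field number repeats, the last value wins in this simplified sketch.
        if field_number in schema:
            known[schema[field_number]] = value
        else:
            unknown[field_number] = value
    return known, unknown


# Bytes written under an older schema: id = 1, name = 2, status = 3.
old_bytes = bytes.fromhex("08 96 01 12 07 74 65 73 74 69 6e 67 18 02")
# Current schema: field 2 ("name") has been removed.
current_schema = {1: "id", 3: "status"}

known, unknown = decode(old_bytes, current_schema)
print(known)    # {'id': 150, 'status': 2}
print(unknown)  # {2: b'testing'}
```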

JSON is an awesome tool. So are Protocol Buffers, and they provide not just a tool but an abstraction separating the definition of structured data from the data itself. Between the layers of that abstraction, all manner of work can be done (encryption, compression, annotation, etc.), and the implementation at the bottom of the abstraction is free to change silently across programming languages. I do not think Protocol Buffers will replace JSON, but I wonder whether the abstraction they offer might outlive it.
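A minimal Python sketch of that layering, under stated assumptions: JSON stands in for the serializer (the protobuf runtime is not in the standard library), zlib compression and a CRC-32 checksum stand in for the compression and annotation layers, and encryption is omitted. The application only calls `encode` and `decode`; the layers in between can be added, removed, or reordered without touching it.

```python
import json
import zlib
from typing import Any, Callable

Codec = Callable[[bytes], bytes]


def compression() -> tuple[Codec, Codec]:
    return zlib.compress, zlib.decompress


def crc32_annotation() -> tuple[Codec, Codec]:
    """Prefix the payload with a CRC-32 so corruption is detected on read."""
    def add(data: bytes) -> bytes:
        return zlib.crc32(data).to_bytes(4, "big") + data

    def check(data: bytes) -> bytes:
        expected, payload = int.from_bytes(data[:4], "big"), data[4:]
        if zlib.crc32(payload) != expected:
            raise ValueError("checksum mismatch")
        return payload

    return add, check


class Store:
    """The application sees only encode/decode; the layers underneath can change."""

    def __init__(self, layers: list[tuple[Codec, Codec]]):
        self.layers = layers

    def encode(self, obj: Any) -> bytes:
        data = json.dumps(obj).encode("utf-8")  # stand-in for a protobuf serializer
        for forward, _ in self.layers:
            data = forward(data)
        return data

    def decode(self, data: bytes) -> Any:
        for _, backward in reversed(self.layers):  # undo layers in reverse order
            data = backward(data)
        return json.loads(data.decode("utf-8"))


store = Store([compression(), crc32_annotation()])
blob = store.encode({"experiment_id": 42, "results": [1.5, 2.5]})
assert store.decode(blob) == {"experiment_id": 42, "results": [1.5, 2.5]}
```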

Coming up in two weeks, we will be learning from Jared Lerner, a lifelong Support Engineer and ZzZEO of Nappr, a marketplace that enables people to find flexible and affordable accommodations at nearby hotels to catch up on much-needed rest. Jared will be sharing his learnings on What Not To Do When Starting Your Own Tech Company. I’m assuming rule #1 is something about hockey sticks. Or was it rocketships?

https://www.bell-labs.com/usr/dmr/www/chist.html s.v. “Embryonic C”

Assuming you want to parse your JSON object into a class or similar structure.

Even within the FORTRAN language itself! By the time of FORTRAN 77, extensions such as RECORD and, yes, STRUCTURE provided other tools for structuring heterogeneous data, with different tradeoffs. https://docs.oracle.com/cd/E19957-01/805-4939/6j4m0vnb1/index.html

When the JSON and proto objects were compressed, the space savings decreased to 14-48%.